Automated provisioning and deployments with Ansible, Docker, and Bitbucket Pipelines.

In this article we will talk about automating the whole server provisioning and deployment process of an application.

Tools we will use:

- Ansible

Ansible is an open-source automation tool used for tasks such as configuration management, application deployment, intra-service orchestration and provisioning.

- Docker

Docker is a tool that makes it easier to build, deploy and run applications using isolated containers.

- Docker Compose

Docker Compose is a tool that makes it easier to run multiple containers at the same time and create networks for them, based on a configuration file, docker-compose.yml.

- Docker Swarm

Docker Swarm is a clustering and scheduling tool for Docker containers.

- Bitbucket Pipelines

Bitbucket Pipelines is an integrated CI/CD service in Bitbucket. It allows easy testing, building and deploying based on a script that you provide in the repository.

- Express

Express is a Node.js web framework that we will use to create a very simple API which returns data based on the environment it runs in.

- NGINX

NGINX is open-source software for web serving, reverse proxying, caching, load balancing, media streaming and more.

- AWS S3

Amazon Simple Storage Service (Amazon S3) is an object storage service that offers industry-leading scalability, data availability, security, and performance.

The base idea

This article goes through the steps of provisioning the servers with the needed applications and deploying the Express app to different servers based on the branch that is pushed. A push to the develop branch deploys to a staging server, the sandbox branch to a sandbox server, and the master branch to the production server.

Staging and sandbox will run only a single docker container, serving the express app.

Production will have a slightly more scalable setup. It will use an NGINX container that load balances the traffic across multiple replicas of the app container.

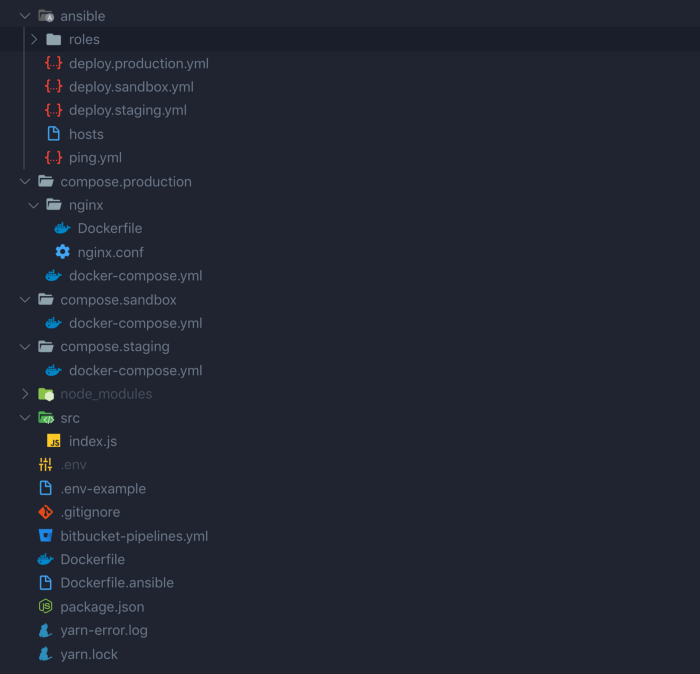

Project structure

Creating the express app

We will start by installing express, nodemon, and dotenv:

`yarn add express nodemon dotenv`

Add the following script to package.json:

`"start": "nodemon src/"`

This will run the express server.

In the src/index.js file we will create a simple server:

Create the docker image to build the express app

Create the NGINX docker image

NGINX config file:

NGINX will use the least connections algorithm to route the requests between node app replicas.

Docker compose files

We will create three separate docker-compose.yml files, one per environment. Each file is sent to the corresponding server and used to run the services there.

The environment variables in the snippets below are provided by Ansible during the deployment.

Staging:
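A minimal sketch of what a staging compose file could look like. The service name `api`, the port 3000 and the variable names `IMAGE_NAME` and `IMAGE_TAG` are assumptions made for illustration. The only part taken from the text is that the values come from environment variables set by Ansible.

```yaml
# docker-compose.yml (staging) - illustrative sketch, not the original file
version: "3.7"

services:
  api:                                   # hypothetical service name
    image: "${IMAGE_NAME}:${IMAGE_TAG}"  # hypothetical variables, substituted from the environment
    environment:
      - NODE_ENV=staging                 # lets the Express app report which environment it runs in
    ports:
      - "80:3000"                        # assumes the Express app listens on port 3000
    restart: always
```

A sandbox file would presumably differ only in the values passed in, for example `NODE_ENV=sandbox`.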

Sandbox:

Production:

As we see, the production compose file is slightly different. The deploy property is used by Docker Swarm to create the stack with the given number of replicas and other settings such as the restart policy.
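To make the role of the deploy property concrete, here is a hedged sketch of a production compose file. The service names, replica count, port and the `IMAGE_NAME`/`IMAGE_TAG` variables are assumptions. `PROXY_IMAGE_NAME` is the variable the article mentions for the NGINX image.

```yaml
# docker-compose.yml (production) - illustrative sketch, not the original file
version: "3.7"

services:
  api:                                   # hypothetical service name
    image: "${IMAGE_NAME}:${IMAGE_TAG}"  # hypothetical variables
    environment:
      - NODE_ENV=production
    deploy:                              # only honoured when deployed as a swarm stack
      replicas: 3                        # assumed replica count
      restart_policy:
        condition: on-failure

  proxy:                                 # hypothetical service name for the NGINX container
    image: "${PROXY_IMAGE_NAME}"
    ports:
      - "80:80"
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
```

Only the proxy publishes a port. The app replicas are reached through the stack's internal network, which is what lets NGINX sit in front of them.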

Time to dive into Ansible scripts

We will use Ansible to install Docker and configure the servers so we can run the app.

Ansible flow:

- SSH into server.

- Install Docker.

- Install Docker compose.

- Copy the directory that contains the docker-compose.yml file into the server.

- Initialise the docker services defined in the compose file.

One thing we have to do before starting the whole process is to create three EC2 instances in AWS that share the same SSH key.

We will store the key in S3, encrypted server-side with the SSE-C method (server-side encryption with customer-provided keys).

Ansible will later use the key to SSH into the servers.

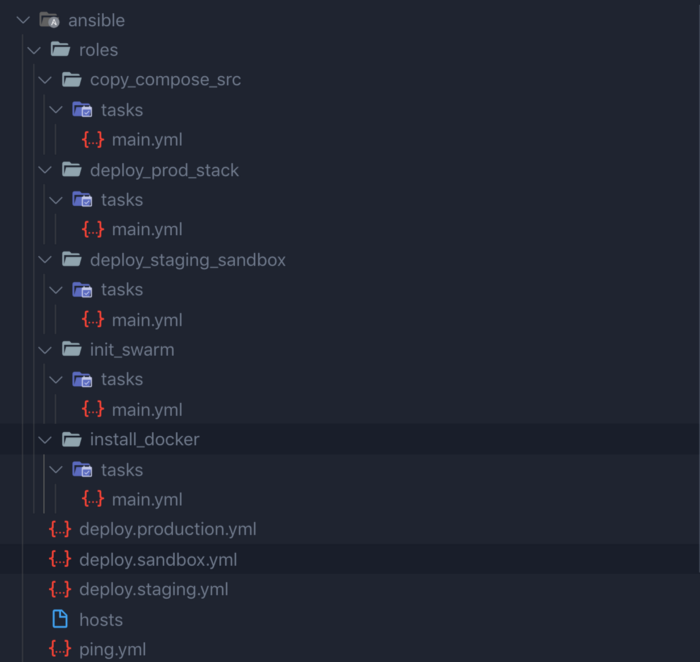

Ansible folder structure:

The main scripts, or "playbooks" as Ansible calls them, are deploy.production.yml, deploy.sandbox.yml, and deploy.staging.yml. These playbooks contain plays, which define a series of tasks that will be executed on the assigned hosts.

The hosts are defined in the hosts inventory file:
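As an illustration, an inventory with one group per environment might look like the sketch below, written here in Ansible's YAML inventory format (the original file may well use the INI format instead). The host aliases, IP addresses and `ansible_user` value are placeholders, not values from the article.

```yaml
# hosts - illustrative sketch of an inventory with one group per environment
all:
  vars:
    ansible_user: ubuntu               # assumed login user on the EC2 instances
  children:
    staging:
      hosts:
        staging-server:                # hypothetical alias
          ansible_host: 203.0.113.10   # placeholder address
    sandbox:
      hosts:
        sandbox-server:
          ansible_host: 203.0.113.20
    production:
      hosts:
        production-server:
          ansible_host: 203.0.113.30
```

Each playbook can then target one group by name, for example `hosts: staging`.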

Let's take a look at deploy.staging.yml:
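A minimal sketch of how such a playbook could be laid out. The role names `copy_compose_src` and `deploy_staging_sandbox` come from this article. The role name `docker`, the `image_name` variable and the `IMAGE_NAME` environment variable are assumptions.

```yaml
# deploy.staging.yml - illustrative sketch, not the original playbook
- name: Provision and deploy the staging server
  hosts: staging                 # group defined in the hosts inventory
  become: true                   # installing packages needs root privileges
  vars:
    # hypothetical variable, read from the environment of the machine running Ansible
    image_name: "{{ lookup('env', 'IMAGE_NAME') }}"
  roles:
    - docker                     # hypothetical name for the Docker installation role
    - copy_compose_src
    - deploy_staging_sandbox
```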

It targets the staging servers and executes the roles and tasks in the order they are defined. Roles are groups of tasks, organised in separate folders so that you can reuse them in different plays. They also make the playbook more readable. Installing Docker and Docker Compose is common to all servers, so we have made a role for that:

Every time this role is executed, Ansible checks the current state of the server. If the state differs from what the task declares, Ansible updates it. Otherwise it skips the task and moves on to the next one.
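A role like this could be sketched as follows, assuming Debian or Ubuntu hosts and the distribution's own `docker.io` and `docker-compose` packages. The role path is hypothetical, and the original role may install Docker differently. The `state` values are what make repeated runs safe: a task reports a change only when the server does not already match.

```yaml
# roles/docker/tasks/main.yml - illustrative sketch (role name is hypothetical)
- name: Install Docker and Docker Compose from the distribution packages
  ansible.builtin.apt:
    name:
      - docker.io
      - docker-compose
    state: present          # nothing happens if the packages are already installed
    update_cache: true

- name: Ensure the Docker daemon is running and starts on boot
  ansible.builtin.service:
    name: docker
    state: started
    enabled: true
```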

Another role shared across the plays is copy_compose_src, which copies the directory that contains the Docker Compose file to the server, because that is all we need to spin up the services.
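The copy step can be sketched with the built-in `copy` module. The variables `compose_src_dir` and `compose_dest_dir` are hypothetical names for the local source directory and the remote parent directory.

```yaml
# roles/copy_compose_src/tasks/main.yml - illustrative sketch
- name: Copy the directory containing docker-compose.yml to the server
  ansible.builtin.copy:
    src: "{{ compose_src_dir }}"     # hypothetical; no trailing slash, so the directory itself is copied
    dest: "{{ compose_dest_dir }}"   # hypothetical; remote directory that will contain the copy
```

With a trailing slash on `src`, only the directory's contents would be copied rather than the directory itself.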

For staging and sandbox the deployment logic is the same. We have a role, deploy_staging_sandbox, which pulls the latest images from Docker Hub and recreates the containers.
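One way such a role could be written is with plain `docker-compose` commands run in the copied directory, as in the sketch below. The variables `compose_project_dir` and `image_name` are hypothetical, and the original role may use a dedicated Docker module instead.

```yaml
# roles/deploy_staging_sandbox/tasks/main.yml - illustrative sketch
- name: Pull the latest images referenced by the compose file
  ansible.builtin.command:
    cmd: docker-compose pull
    chdir: "{{ compose_project_dir }}"   # hypothetical; directory holding docker-compose.yml
  environment:
    IMAGE_NAME: "{{ image_name }}"       # hypothetical; consumed by the compose file
  changed_when: true                     # the command module cannot detect changes by itself

- name: Recreate the containers in the background
  ansible.builtin.command:
    cmd: docker-compose up -d
    chdir: "{{ compose_project_dir }}"
  environment:
    IMAGE_NAME: "{{ image_name }}"
  changed_when: true
```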

In production the deployment logic differs a little, because we use Docker Swarm to manage the running containers: it creates multiple replicas and restarts containers that go down, based on the configuration we provide in the compose file.

The deploy.production.yml file is almost the same as the staging and sandbox playbooks, except for the initialisation of Docker Swarm and the last task, deploy_prod_stack:

We also pass some other variables that we read from the repository variables, such as PROXY_IMAGE_NAME, which is the name of our NGINX image.

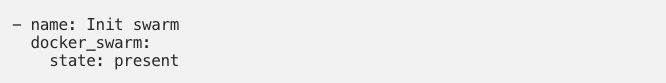

Docker swarm initialisation role:
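A hedged sketch of such a role using the `community.docker.docker_swarm` module. It assumes the `community.docker` collection is installed on the machine running Ansible, the Docker SDK for Python is available on the server, and fact gathering is enabled so that `ansible_default_ipv4` is defined. The role path is hypothetical.

```yaml
# roles/swarm_init/tasks/main.yml - illustrative sketch (role name is hypothetical)
- name: Initialise a swarm on this node if it is not part of one yet
  community.docker.docker_swarm:
    state: present
    advertise_addr: "{{ ansible_default_ipv4.address }}"   # address other nodes would use to reach this manager
```

Because the module is declarative, running it again against a node that is already a swarm manager leaves the swarm untouched.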

Deployment role:

We use docker stack deploy with our compose file to spin up the containers in the swarm.
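The deployment could be sketched as a single task that runs `docker stack deploy` in the copied directory. The role name `deploy_prod_stack` is from the article. The variables `stack_name`, `compose_project_dir`, `image_name` and `proxy_image_name` are hypothetical.

```yaml
# roles/deploy_prod_stack/tasks/main.yml - illustrative sketch
- name: Deploy or update the stack from the compose file
  ansible.builtin.command:
    cmd: "docker stack deploy --compose-file docker-compose.yml {{ stack_name }}"
    chdir: "{{ compose_project_dir }}"           # hypothetical; directory holding docker-compose.yml
  environment:
    IMAGE_NAME: "{{ image_name }}"               # hypothetical; app image
    PROXY_IMAGE_NAME: "{{ proxy_image_name }}"   # NGINX image, as mentioned in the article
  changed_when: true
```

Running the same command again with a new image updates the existing services instead of creating a second stack.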

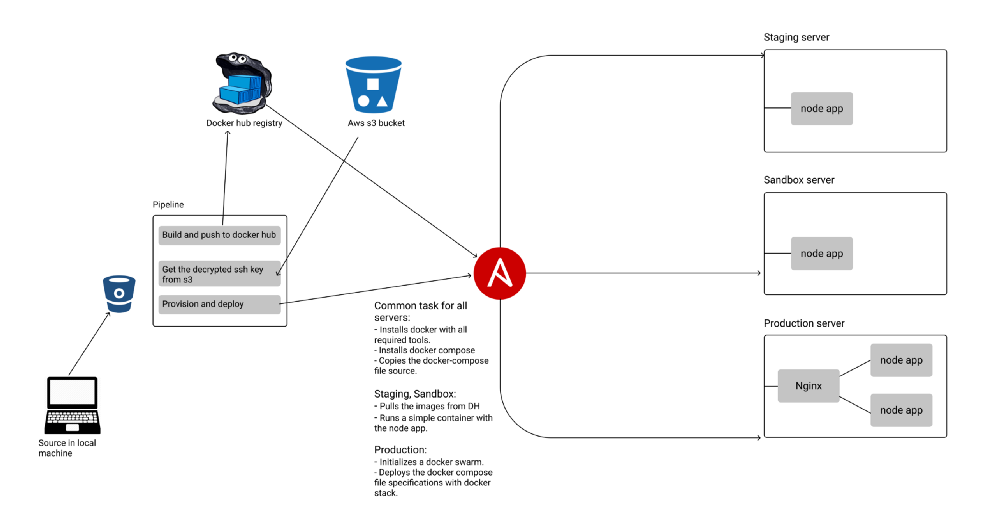

Wiring it all up with Bitbucket Pipelines

We are at the last step: wiring it all together. A single push to one of the respective branches (develop, sandbox or master) triggers a pipeline execution which performs the following steps:

- Builds the Express app image (and the NGINX image for production) and pushes it to Docker Hub.

- Gets the decrypted secret key from the AWS S3 bucket.

- Executes the Ansible playbook that manages all the server setup described above.

In the pipeline script below you will see the whole process and commands that are used to execute playbooks etc…
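As a rough textual sketch of the three steps above, a pipeline for the develop branch might look like this. The build image, the repository variable names (`DOCKER_HUB_USER`, `DOCKER_HUB_PASSWORD`, `IMAGE_NAME`, `KEY_BUCKET`, `KEY_OBJECT`, `SSE_CUSTOMER_KEY`) and the file paths are all assumptions. It also assumes the build image ships both Ansible and the AWS CLI, with AWS credentials supplied through repository variables.

```yaml
# bitbucket-pipelines.yml - illustrative sketch for the develop branch only
image: example/ansible-awscli:latest   # hypothetical image with Ansible and the AWS CLI

pipelines:
  branches:
    develop:
      - step:
          name: Build, push and deploy to staging
          services:
            - docker                   # makes the Docker daemon available to the step
          script:
            # 1. Build the app image and push it to Docker Hub
            - echo "$DOCKER_HUB_PASSWORD" | docker login --username "$DOCKER_HUB_USER" --password-stdin
            - docker build -t "$IMAGE_NAME" .
            - docker push "$IMAGE_NAME"
            # 2. Download the SSH key; S3 decrypts it using the customer-provided key
            - aws s3 cp "s3://$KEY_BUCKET/$KEY_OBJECT" ./deploy_key.pem --sse-c AES256 --sse-c-key "$SSE_CUSTOMER_KEY"
            - chmod 600 ./deploy_key.pem
            # 3. Run the playbook for this environment
            - ansible-playbook -i hosts deploy.staging.yml --private-key ./deploy_key.pem
```

The sandbox and master branches would repeat the step with their own playbook, and master would additionally build and push the NGINX image. A real pipeline also has to handle SSH host key verification for the target servers.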

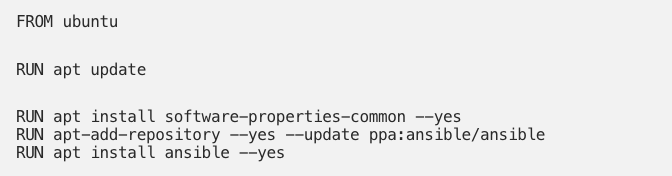

Bonus

I have also created a simple docker image with Ansible installed in it so it can be used directly by our pipeline:

Hope you enjoyed this article & see you in the next one :D